Vestibular VR: 5 Features to include in your Metaverse to make it more accessible

Originally published on LinkedIn.

Hey everyone, and welcome to my deep dive into all things VR/Metaverse design and development! I had a bit of fun creating the teaser video for this topic, but we mustn't overlook just how vital accessibility is in virtual reality experiences.

Now that I have your attention, let's learn more about what accessibility looks like when designing with virtual reality users at the forefront.

Here are five key ways your team can keep people with disabilities front of mind to build better games and Metaverses!

Virtual reality (VR) is a form of immersive technology that offers users an unparalleled experience by combining the physical movement of their bodies with a virtual environment. As VR technology improves, more developers and designers are building in the Metaverse, the universe of interconnected virtual worlds, for users to explore and experience. However, for every user to have the most immersive experience possible, certain features need to be included in the Metaverse. Let's take a look at five tips for making your Metaverse more accessible.

1. Movement Response Controls

Responsive Locomotion System

A responsive locomotion system is essential for any VR experience; it allows typical users to move around freely and explore their environment without getting motion sickness or having difficulty navigating their surroundings. When designing your Metaverse, make sure to include a locomotion system that is both intuitive and easy to use so that users can move around quickly and comfortably while exploring your virtual world.

While some motion tracking and responsiveness are handled at the headset and hardware level, at the software design level it is the designer's and developer's responsibility to translate that movement into the virtual world.

This is where the experience can go south for a person with vestibular issues. How I experience movement and how my brain processes it are altered, so while the default calibration might be accurate for a person without vestibular system damage, it's unlikely to be suitable for me.

Tip #1:

Giving users the ability to fine-tune this calibration until it feels most comfortable for them would be a huge feature enhancement, allowing more people to enjoy your virtual world.
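For the developers reading along, here is a minimal sketch of what a user-adjustable locomotion calibration could look like. It assumes thumbstick-driven movement and a hypothetical `LocomotionComfortProfile` with two knobs, a speed multiplier and an acceleration cap; those names, the 2 m/s base speed, and the default values are my own illustration, not any engine's API. The idea is simply that movement eases in and out at a rate the user chooses instead of snapping to full speed.

```python
# Sketch only: LocomotionComfortProfile, next_velocity and the default values
# are hypothetical illustrations, not part of any VR engine or SDK.
import math
from dataclasses import dataclass


@dataclass
class LocomotionComfortProfile:
    """Hypothetical per-user locomotion settings; field names are illustrative."""
    speed_scale: float = 1.0       # multiplier on the default movement speed
    max_acceleration: float = 4.0  # m/s^2; lower values ease into and out of motion


def next_velocity(stick_x: float, stick_y: float,
                  current_vx: float, current_vy: float,
                  dt: float, profile: LocomotionComfortProfile,
                  base_speed: float = 2.0) -> tuple[float, float]:
    """Return the next horizontal velocity (vx, vy) in m/s for one frame.

    Thumbstick input (each axis in -1..1) is scaled by the user's speed_scale,
    then the change from the current velocity is capped so the avatar never
    speeds up or slows down faster than profile.max_acceleration.
    """
    target_vx = stick_x * base_speed * profile.speed_scale
    target_vy = stick_y * base_speed * profile.speed_scale
    dvx, dvy = target_vx - current_vx, target_vy - current_vy
    change = math.hypot(dvx, dvy)
    max_change = profile.max_acceleration * dt
    if change > max_change:
        # Shrink the velocity change so its magnitude equals max_change.
        factor = max_change / change
        dvx, dvy = dvx * factor, dvy * factor
    return current_vx + dvx, current_vy + dvy


# Usage: a gentler profile ramps up over several frames instead of jumping to full speed.
gentle = LocomotionComfortProfile(speed_scale=0.5, max_acceleration=1.0)
vx, vy = next_velocity(0.0, 1.0, 0.0, 0.0, dt=0.1, profile=gentle)
print(vx, vy)  # prints 0.0 0.1
```

Capping acceleration rather than only speed is a deliberate choice here, since sudden starts and stops are exactly the kind of abrupt change described above; whether these particular knobs are the right ones would need testing with vestibular users.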

Head Tracking Technology

Head tracking technology allows users to move their heads naturally within their environment without feeling disoriented or uncomfortable. This feature also enables them to interact with objects within the virtual world using their head movements, giving them greater control over what they're doing in the virtual space.

Here is another great example of how I would benefit from some adjustment and calibration options. As a person with vestibular dysfunction, I intentionally guard my movements, and how much I guard them varies along a spectrum, meaning not all days are created equal. Much like any chronic condition, there are good days and bad days, good weeks and bad weeks, so my guarding can range depending on how I am feeling physically. It's very common for someone with my condition to limit head movements, especially sudden ones. This is where I might want to calibrate a subtle movement to represent a full left head turn, or not, depending on the day.

People with BPPV or PPPD often avoid tilting their head the way you would when looking up, because this can quickly trigger intense vertigo spins.

Tip #2:

Giving the user the ability to calibrate head movements and tracking to their preference, abilities, and range of motion would be extremely valuable.
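Here is a minimal sketch of one way such a calibration could work, assuming the app receives head yaw and pitch in degrees each frame. The `HeadTrackingCalibration` fields and `map_head_rotation` are hypothetical names: the user records how far they can comfortably turn or tilt today and how far they want the view to move at that point, and the sketch scales their real rotation by that ratio. Only upward pitch is amplified, to reflect the looking-up trigger mentioned above. Amplified head rotation is my own assumption here; it may itself feel uncomfortable for some users, so it should default to a gain of 1 and be tested with vestibular users.

```python
# Sketch only: HeadTrackingCalibration and map_head_rotation are hypothetical
# names; this assumes head yaw/pitch arrive in degrees each frame.
from dataclasses import dataclass


@dataclass
class HeadTrackingCalibration:
    """Hypothetical per-user head-tracking calibration (field names are illustrative)."""
    comfortable_yaw_deg: float = 90.0       # physical left/right turn the user is comfortable making today
    target_yaw_deg: float = 90.0            # virtual turn that physical turn should produce
    comfortable_pitch_up_deg: float = 45.0  # physical upward tilt the user is comfortable making
    target_pitch_up_deg: float = 45.0       # virtual upward look that tilt should produce


def map_head_rotation(physical_yaw_deg: float, physical_pitch_deg: float,
                      cal: HeadTrackingCalibration) -> tuple[float, float]:
    """Scale physical head rotation into virtual view rotation.

    Yaw is scaled linearly in both directions. Only upward pitch (positive
    values) is amplified; looking down passes through unchanged.
    """
    yaw_gain = cal.target_yaw_deg / cal.comfortable_yaw_deg
    virtual_yaw = physical_yaw_deg * yaw_gain
    if physical_pitch_deg > 0:
        pitch_gain = cal.target_pitch_up_deg / cal.comfortable_pitch_up_deg
        virtual_pitch = physical_pitch_deg * pitch_gain
    else:
        virtual_pitch = physical_pitch_deg
    return virtual_yaw, virtual_pitch


# A "bad day" calibration: a 30-degree turn looks a full 90 degrees to the side,
# and a 10-degree upward tilt looks 45 degrees up.
bad_day = HeadTrackingCalibration(comfortable_yaw_deg=30.0, target_yaw_deg=90.0,
                                  comfortable_pitch_up_deg=10.0, target_pitch_up_deg=45.0)
print(map_head_rotation(30.0, 10.0, bad_day))  # (90.0, 45.0)
```

Because the calibration is just data, a user could keep a "good day" and a "bad day" profile and switch between them, which fits the day-to-day variation described above.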

2. Depth of Field, Parallax & Realism

Physics Simulation

Physics simulation helps create realistic interactions between objects by simulating real-world physics within your Metaverse's boundaries. This adds a sense of realism by letting objects such as cars or characters interact with each other as they would in the real world when they come into contact, giving users a more authentic experience while exploring your Metaverse.

So while many designers and developers strive to simulate real-world physics, the more realistic a virtual environment is, the more challenges it can create for those with vestibular dysfunction.

Why? Well, I'm not a doctor or a physicist (although I am related to some very famous and notable physicists... a story for another article, perhaps), but I can offer my best guess as to why more realistic 3D environments produce more challenges.

While the vestibular system is technically part of your inner ear, the dysfunction is neurological. That means the brain is struggling to process the data it's receiving, and in a hyper-realistic, very 3D, and likely sensory-heavy virtual space, the brain has to work extra hard to process the experience.

So here is a case where less is more: I have fewer triggering situations in lower-fidelity virtual spaces with a less intense depth-of-field effect (where items in the distance are blurred) and less parallax (where objects in the distance move more slowly than objects nearby). While these elements produce a more realistic virtual experience, they also create a more problematic one.

Tip #3:

Giving users the option to reduce depth of field, flatten depth, and lower realism would make the experience more accessible and inclusive.
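As a rough sketch of how those two settings could be exposed as sliders, here is a simplified layered-scenery model, an assumption on my part that mostly applies to distant backdrop layers (a fully 3D stereo scene produces parallax on its own). `VisualComfortSettings`, `layer_offset`, and `blur_radius` are hypothetical names. With full parallax, a layer scrolls at `reference_depth / layer_depth` of the camera's rate, so distant layers move more slowly than nearby ones; turning the slider down blends every layer toward the same rate, which flattens depth. The depth-of-field setting simply scales whatever blur the designer specified.

```python
# Sketch only: VisualComfortSettings, layer_offset and blur_radius are
# hypothetical, and the layered-background model is a simplifying assumption.
from dataclasses import dataclass


@dataclass
class VisualComfortSettings:
    depth_of_field_strength: float = 1.0  # 0 = no distance blur, 1 = full designed effect
    parallax_strength: float = 1.0        # 0 = layers move together, 1 = full parallax


def layer_offset(camera_shift: float, layer_depth: float, reference_depth: float,
                 settings: VisualComfortSettings) -> float:
    """How far a scenery layer scrolls when the camera moves by camera_shift.

    At full parallax the layer moves at reference_depth / layer_depth of the
    camera's rate, so distant layers move more slowly than near ones. Lower
    parallax_strength blends every layer toward the same rate (flatter depth).
    """
    realistic_rate = reference_depth / layer_depth
    rate = 1.0 + (realistic_rate - 1.0) * settings.parallax_strength
    return camera_shift * rate


def blur_radius(designed_radius_px: float, settings: VisualComfortSettings) -> float:
    """Scale the designer's depth-of-field blur by the user's preference."""
    return designed_radius_px * settings.depth_of_field_strength


# Usage: a fully flattened preset moves a distant layer at the same rate as the camera.
flattened = VisualComfortSettings(depth_of_field_strength=0.0, parallax_strength=0.0)
print(layer_offset(10.0, layer_depth=40.0, reference_depth=10.0, settings=flattened))  # 10.0
```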

3. Realistic Environment Stimuli

For users to feel fully immersed in your Metaverse, you must provide them with realistic environmental stimuli such as lighting, sound, and textures. Lighting can set the mood for each area within your Metaverse, sound can evoke emotions, and textures can give a sense of realism. Utilizing these elements will help create an engaging environment for users to explore and enjoy. Except this is not always the case for people with vestibular issues or sensory disabilities. I'm going to split the environment into two core areas: community-driven (where you experience other users in real time) and world-centric (the environmental elements that are part of the world's or game's core experience, regardless of third-party involvement).

Community-driven Stimuli Control

While a huge part of the appeal and experience of the Metaverse is the community aspect, the more "people" there are in a space moving about and interacting, the more environmental noise is produced.

Let's take it out of the virtual world for a second. Imagine walking down the street when someone suddenly jumps out from an alley behind you and yells loudly. This would be a very startling experience, and besides possibly making you "jump", it could cause you to turn your head quickly and abruptly. In the real world, that can make a person with vestibular dysfunction fall to the ground. The same applies in a virtual world, so offering a setting that limits the intensity and abruptness of what other users can do would be critical to ensuring the safety of a vestibular user.

Second, think about being in a crowded room with a lot of people talking and moving around. This is a real-world environment that people like me often avoid, so it's important to ensure we can avoid these types of experiences in the virtual world as well.

One technique I like is using the concept of focus or safe zones, meaning any other users in the space that are not within my direct interaction zone can be "turned down". Perhaps they are blurred or dulled, their volume is muted, and so on.
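To make the focus-zone idea concrete, here is a minimal sketch, assuming each user's avatar has a 3D position and the client can set a per-avatar volume and opacity. `FocusZoneSettings` and `presentation_for` are hypothetical names. Anyone inside my chosen radius is presented normally; beyond it, their volume and visibility fade over a short band down to the levels I picked, rather than switching off abruptly, since abrupt changes are exactly what we're trying to avoid.

```python
# Sketch only: FocusZoneSettings and presentation_for are hypothetical names;
# this assumes avatar positions are (x, y, z) tuples in meters.
import math
from dataclasses import dataclass


@dataclass
class FocusZoneSettings:
    radius_m: float = 3.0          # users within this distance are in my interaction zone
    fade_m: float = 2.0            # distance over which others fade to the "outside" levels
    outside_volume: float = 0.0    # 0 = muted, 1 = normal volume
    outside_opacity: float = 0.3   # how visible avatars outside the zone remain


def presentation_for(my_pos: tuple[float, float, float],
                     other_pos: tuple[float, float, float],
                     settings: FocusZoneSettings) -> tuple[float, float]:
    """Return (volume, opacity) to apply to another user's avatar."""
    distance = math.dist(my_pos, other_pos)
    if distance <= settings.radius_m:
        t = 0.0  # fully inside the zone: normal presentation
    elif distance >= settings.radius_m + settings.fade_m:
        t = 1.0  # fully outside: the user's "turned down" levels
    else:
        t = (distance - settings.radius_m) / settings.fade_m
    # Linear blend from normal (1.0) toward the outside levels as t goes 0 -> 1.
    volume = 1.0 + (settings.outside_volume - 1.0) * t
    opacity = 1.0 + (settings.outside_opacity - 1.0) * t
    return volume, opacity


# Usage: someone halfway through the fade band is at half volume and partly dimmed.
zone = FocusZoneSettings(radius_m=3.0, fade_m=2.0, outside_volume=0.0, outside_opacity=0.5)
print(presentation_for((0.0, 0.0, 0.0), (4.0, 0.0, 0.0), zone))  # (0.5, 0.75)
```

Blurring could be driven by the same `t` value; I've left it out to keep the sketch short.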

World-centric Environmental Stimuli

This continues the previous concept, but here the stimuli are central to the world experience itself. This one might feel more controversial because so much time and energy goes into designing the world and how each element is experienced. I'll go back to a real-world example and then compare it to a game I know of that mimics this type of environment.

Any type of repeating visual can be difficult for my brain to process: driving through a tunnel with repeating lights, over a bridge with repeating trusses, or even just on the highway at night with constant headlights coming toward and passing me can cause my brain to misfire.

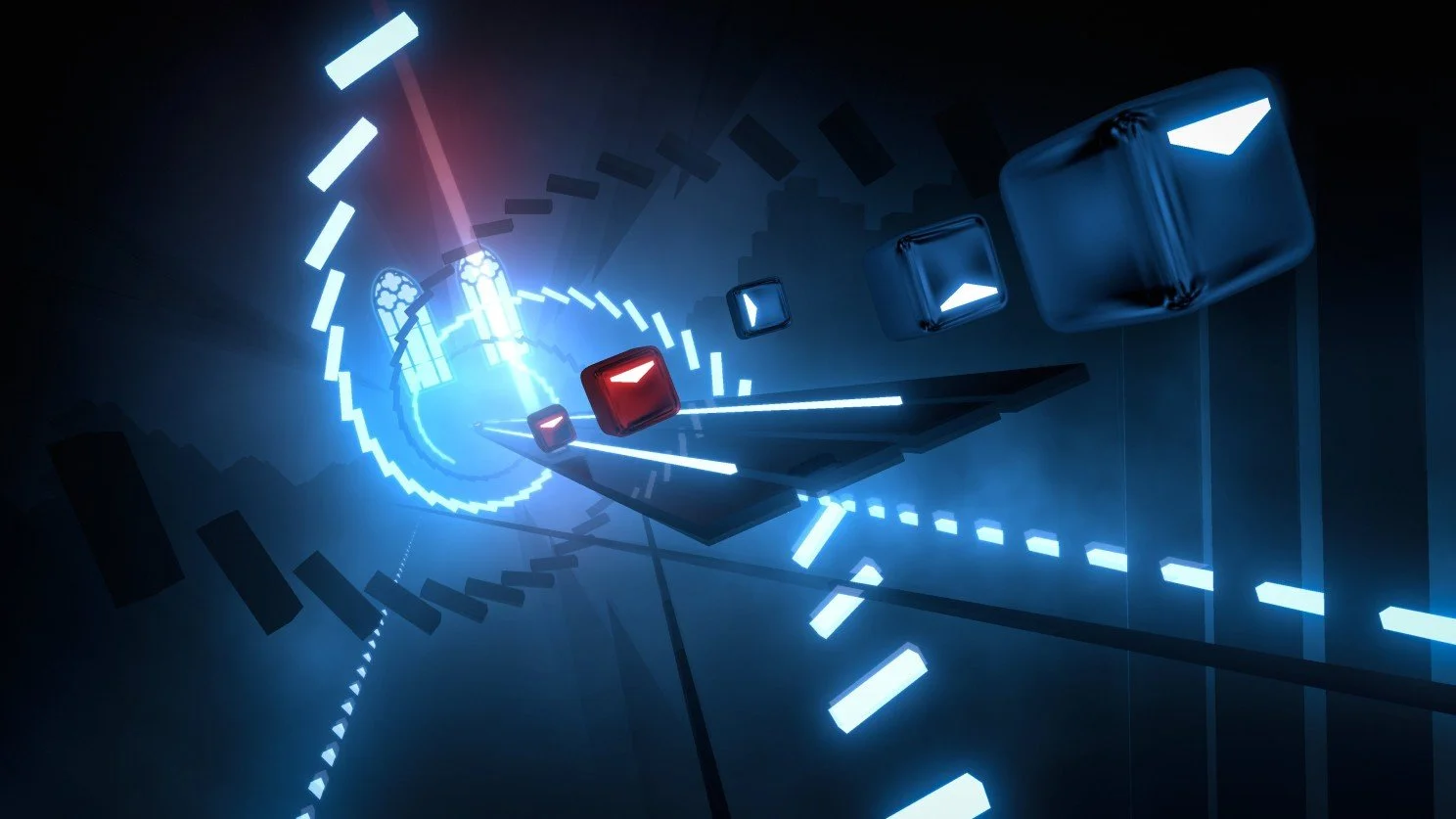

Screenshot of the game Beat Saber

Now think about a VR game like Beat Saber... Oh, now you understand what game I was pretending to play in my teaser video! The combined visual and sound experience of a game like Beat Saber is a hard stop for me. I can't do it; it's like taking all my awful real-world triggers and putting them into a virtual reality nightmare on steroids. So while that example is on the extreme end, and that experience is core to the game, it's important to understand that these types of environments are not friendly spaces for us vestibular folks.

Since the vestibular system relies on visual data, sound data, and proprioceptive data, we have to carefully consider the use of visual and sound data in virtual environments. And while haptics are gaining a presence, the sense of touch will always be more limited in virtual experiences.

Tip #4:

Allowing the user to control how much environmental stimuli they want to experience can be a huge feature for many types of disabilities, not just vestibular.
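One way a world could honor this kind of setting for the repeating-light problem described above is to let the user cap how often flashes or pulses may fire and scale the overall intensity of effects. Here is a minimal sketch under those assumptions; `StimulusSettings`, `thin_flashes`, and `scaled_level` are hypothetical names, and the default cap is a placeholder, not a recommended or clinically derived threshold. Thinning out events that arrive faster than the user's cap is just one illustrative technique.

```python
# Sketch only: StimulusSettings, thin_flashes and scaled_level are hypothetical;
# the default cap is a placeholder value, not a clinical threshold.
from dataclasses import dataclass


@dataclass
class StimulusSettings:
    intensity: float = 1.0               # 0 = calmest, 1 = full designed experience
    max_flashes_per_second: float = 2.0  # placeholder cap on flash/pulse events


def thin_flashes(flash_times_s: list[float], settings: StimulusSettings) -> list[float]:
    """Drop flash/pulse events that arrive faster than the user's cap.

    Events are kept in time order; any event closer than the minimum gap to
    the last kept event is skipped.
    """
    if settings.max_flashes_per_second <= 0:
        return []  # the user has turned flashing effects off entirely
    min_gap = 1.0 / settings.max_flashes_per_second
    kept: list[float] = []
    for t in sorted(flash_times_s):
        if not kept or t - kept[-1] >= min_gap:
            kept.append(t)
    return kept


def scaled_level(designed_level: float, settings: StimulusSettings) -> float:
    """Scale a designed brightness or volume level by the user's intensity setting."""
    return designed_level * settings.intensity


# Usage: a calmer profile keeps only flashes at least half a second apart.
calm = StimulusSettings(intensity=0.4, max_flashes_per_second=2.0)
print(thin_flashes([0.0, 0.1, 0.5, 0.6, 1.2], calm))  # [0.0, 0.5, 1.2]
```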

4. POV Adjustments & Lag Time

Fluid Body Movement Tracking

Fluid body movement tracking allows users to move naturally within their environment without feeling restricted by movements being tracked inaccurately or too slowly. It helps ensure that movements are tracked accurately and fluidly, so users don't feel held back from exploring every nook and cranny of your Metaverse because of lag or inaccurate tracking. Much like head tracking, this is where typical users and vestibular users may differ: some people living with vestibular dysfunction experience real-world lag time, which can be physical or neurological.

For me, it's physical and presents as a problem with eye tracking. The eye on my affected side doesn't always track in sync with the eye on my unaffected side, so following a moving object across the screen is inherently challenging for me. This kind of eye tracking is part of the diagnostic testing used to measure the degree or severity of a person's vestibular damage. For other people, their eyes might move together but their brain doesn't process the information in sync. Either scenario can result in strain, disorientation, exhaustion, and more.

You can't adjust for this on the user's behalf the way you can with some of the previous ideas. But what you can do, as I learned from my colleague and fellow vestibular warrior Nat Tarnoff, is offer point-of-view (POV) adjustments. This was a fascinating conversation because they described how much better the experience is, and how much longer they can enjoy it, when they can play from a bird's-eye view, sometimes referred to as a "god view". This made total sense to me! While I understand the appeal of a first-person POV in immersive games, having the option to toggle from that POV to a bird's-eye view immediately removes the brain's need to process the experience in the same way.

So for my final tip, and in my opinion the ultimate vestibular hack for making virtual reality and the Metaverse more accessible and inclusive for people with vestibular challenges...

Tip #5:

(With a major shout-out to accessibility expert and my vestibular colleague Nat.) Give the user the option to change their point of view entirely and experience the world from angles other than the default first-person view.
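Here is a minimal sketch of what a POV toggle could compute, assuming a coordinate system with y pointing up and a camera rig whose base pose the headset's own head tracking is applied on top of. `ViewMode`, `CameraRig`, `camera_for`, and `toggle`, along with the 1.6 m eye height and 15 m overhead height, are hypothetical illustrations rather than any engine's API. First-person places the camera at eye level; bird's-eye lifts it above the avatar and points it straight down.

```python
# Sketch only: ViewMode, CameraRig, camera_for, toggle and the default heights
# are hypothetical; y is assumed to point up, units are meters and degrees.
from dataclasses import dataclass
from enum import Enum


class ViewMode(Enum):
    FIRST_PERSON = "first_person"
    BIRDS_EYE = "birds_eye"


@dataclass(frozen=True)
class CameraRig:
    """Base camera pose; the headset's head tracking is applied on top of this."""
    position: tuple[float, float, float]  # (x, y, z) with y pointing up
    pitch_deg: float                      # 0 = level, -90 = looking straight down


def camera_for(avatar_pos: tuple[float, float, float], mode: ViewMode,
               eye_height_m: float = 1.6, overhead_height_m: float = 15.0) -> CameraRig:
    """Place the camera rig for the chosen point of view."""
    x, y, z = avatar_pos
    if mode is ViewMode.FIRST_PERSON:
        return CameraRig((x, y + eye_height_m, z), 0.0)
    # Bird's-eye ("god view"): lift the camera above the avatar and look straight down.
    return CameraRig((x, y + overhead_height_m, z), -90.0)


def toggle(mode: ViewMode) -> ViewMode:
    """Switch between first-person and bird's-eye views."""
    return ViewMode.BIRDS_EYE if mode is ViewMode.FIRST_PERSON else ViewMode.FIRST_PERSON


# Usage: one button press moves the user from first-person to an overhead view.
mode = toggle(ViewMode.FIRST_PERSON)
print(camera_for((1.0, 0.0, 2.0), mode))
# CameraRig(position=(1.0, 15.0, 2.0), pitch_deg=-90.0)
```

A real implementation would likely also ease the camera between the two poses rather than cutting instantly; I've kept the sketch to the pose calculation itself.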

In Conclusion:

Virtual reality has transformed how people interact with technology by allowing them to become fully immersed in digital worlds through physical movement tracking, physics simulation, and realistic environmental stimuli such as lighting, sound, and textures. By including these five tips in your design plan when creating a new Metaverse, you create an accessible space where more people can explore safely while having fun!